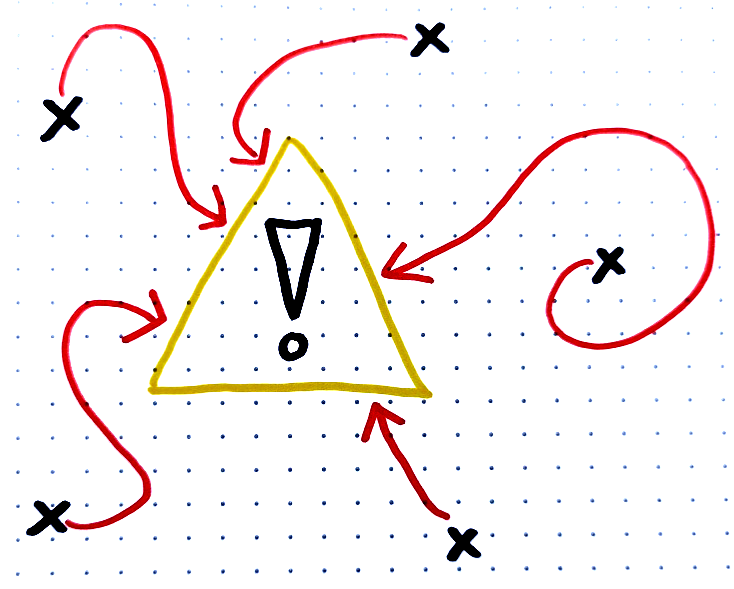

◎ There are some instrumental sub-goals (representend by the warning sign) that many intelligent agents (the crosses) will want to pursue, mostly independent of their final goals.

◎ There are some instrumental sub-goals (representend by the warning sign) that many intelligent agents (the crosses) will want to pursue, mostly independent of their final goals.

Basic

Instrumental convergence means that humans, or any other entities, with different ultimate goals will often adopt similar intermediate strategies, known as instrumental goals, to help them achieve their ultimate goals.

For example, three friends who are training to be a scientist, lawyer and athlete respectively will all benefit from time-management skills to juggle their responsibilities, social skills to build useful networks, and a healthy amount of sleep to recover from their busy schedules.

In the context of AI, this implies that AI systems will be motivated to achieve certain instrumental goals, such as resource acquisition, improved capabilities, and ultimately self-preservation to prevent being shut down.

For example, if a highly intelligent robot was programmed to fetch coffee it would resist attempts to be shut off despite not being designed for self-preservation, simply because “you can’t fetch the coffee if you are dead”, as Stuart Russell puts it. Self-preservation is an instrumental goal that ensures the ultimate goal can be reached.

Intermediate

The concept of instrumental convergence is important to understand when considering the likely goals of advanced AI systems. It describes how various intelligent agents with different ultimate objectives are likely to converge on similar intermediate or “instrumental” goals that are essential for achieving their respective end goals. An example of this is two different ML systems: one designed to develop personalized medicine and another for algorithmic trading. While their ultimate goals are vastly different, they might both benefit from instrumental goals like minimizing latency, maximizing data throughput, or avoiding overfitting. These are convergent instrumental objectives because they are generally useful across different optimization problems.

More general and advanced ML systems could potentially share instrumental goals which pose significant risks to humanity, such as resource acquisition, self-preservation, and elimination of threats. It isn’t hard to imagine that a system which pursues these goals would constitute a threat to humanity, as it would actively avoid being shut down, and seek to prevent other agents from meddling in its interests.

Advanced

- https://arbital.com/p/instrumental_convergence/

- https://www.lesswrong.com/tag/instrumental-convergence

- https://philosophicaldisquisitions.blogspot.com/2014/07/bostrom-on-superintelligence-2.html

- Why Would AI Want To Do Bad Things https://www.youtube.com/watch?v=ZeecOKBus3Q

- Optimal Policies Tend To Seek Power https://arxiv.org/pdf/1912.01683.pdf