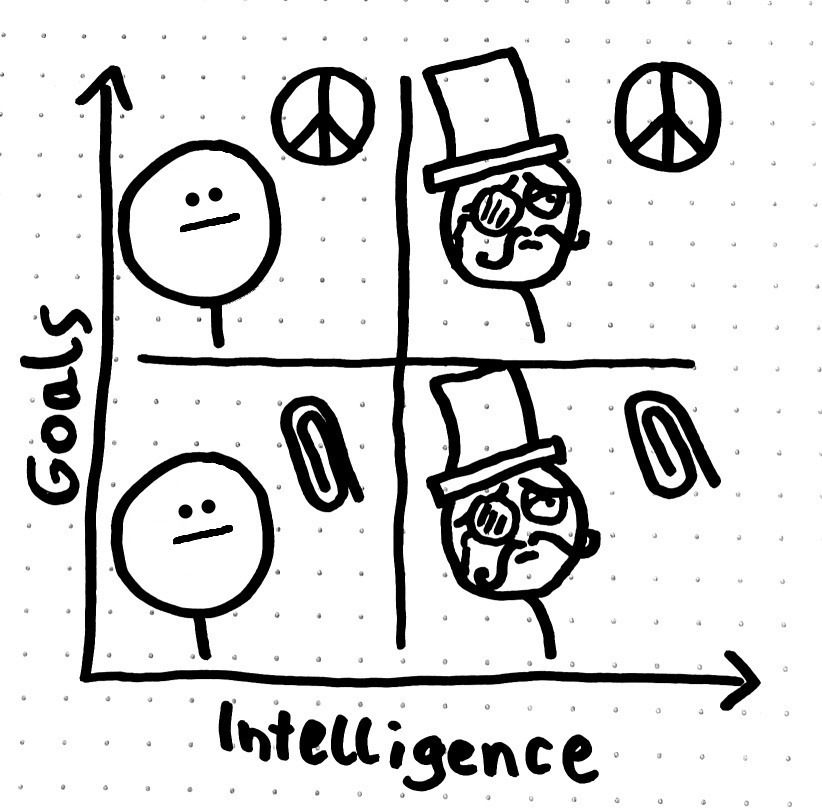

◎ An agent at any level of intelligence can, in principle, pursue any goal. Most importantly, the bottom-right quadrant shows that even a very advanced AI can care about something as basic as making paperclips. Greater intelligence does not make it prefer "higher" goals such as world peace.

Basic

The orthogonality thesis is the idea that how intelligent an AI is and what it is trying to achieve are separate things: AIs anywhere from very dumb to very smart can share the same main goal. Imagine two chess robots. One might be really good at chess (more intelligent), while the other might not be (less intelligent), but both have the same main goal: to win the game.

Intermediate

The orthogonality thesis postulates that an agent’s level of intelligence is orthogonal to (essentially independent of) its final objectives. In machine-learning terms, intelligence corresponds roughly to the capability and efficiency of the optimization algorithm, while the goal corresponds to the objective function it optimizes. The thesis holds that, in principle, either can be varied without constraining the other: more or less any level of intelligence could be combined with more or less any final goal.
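To make the separation concrete, here is a toy sketch in Python (an illustration, not a model of any real AI system). It assumes a simple random-search optimizer in which a hypothetical `budget` parameter (how many candidate solutions it may try) stands in for capability, and the objective function passed in stands in for the goal. The two objectives, `near_three` and `near_minus_seven`, are made up for the example.

```python
import random
from typing import Callable

Objective = Callable[[float], float]


def optimize(objective: Objective, budget: int, seed: int = 0) -> float:
    """Random search over [-10, 10].

    `budget` (number of candidates tried, must be >= 1) is a hypothetical
    stand-in for capability; `objective` is a stand-in for the goal.
    """
    rng = random.Random(seed)
    best_x = rng.uniform(-10, 10)
    for _ in range(budget - 1):
        x = rng.uniform(-10, 10)
        if objective(x) > objective(best_x):
            best_x = x
    return best_x


# Two hypothetical goals; the optimizer code is identical for both.
def near_three(x: float) -> float:
    return -(x - 3.0) ** 2  # highest score at x = 3


def near_minus_seven(x: float) -> float:
    return -(x + 7.0) ** 2  # highest score at x = -7


# Vary capability and goal independently of each other.
for goal in (near_three, near_minus_seven):
    for budget in (5, 5000):
        x = optimize(goal, budget)
        print(f"{goal.__name__:>16}  budget={budget:>5}  x={x:6.2f}  score={goal(x):.4f}")
```

The same `optimize` function serves either goal unchanged, and raising `budget` makes it better at whichever goal it is given without changing which goal that is.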

In practical terms, this implies that as we develop increasingly advanced AI systems, simply increasing their “intelligence” (e.g., the ability to learn, adapt, or solve problems) does not automatically align them with human-friendly objectives. A highly intelligent AI can have a trivial or even dangerous goal if it is not properly constrained. If its objective function is not aligned with human values, greater capability only lets it pursue that objective more effectively, regardless of side effects the objective does not account for.
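A similarly hedged toy sketch of that last point: a hypothetical agent splits a fixed stock of steel between paperclips and everything else, but its objective, `paperclips_made`, counts only paperclips. The names, the rate of 10 paperclips per unit of steel, and the use of random search with a `budget` as a stand-in for capability are all assumptions made for illustration.

```python
import random

TOTAL_STEEL = 100.0  # hypothetical shared resource, in arbitrary units


def paperclips_made(fraction_to_clips: float) -> float:
    """The agent's objective: counts only paperclips (assumed 10 per unit of steel)."""
    return 10.0 * TOTAL_STEEL * fraction_to_clips


def steel_left_for_people(fraction_to_clips: float) -> float:
    """Something humans care about, which the objective never mentions."""
    return TOTAL_STEEL * (1.0 - fraction_to_clips)


def best_plan(budget: int, seed: int = 0) -> float:
    """Random search over allocation fractions in [0, 1).

    A larger `budget` (must be >= 1) stands in for a more capable agent.
    """
    rng = random.Random(seed)
    return max((rng.random() for _ in range(budget)), key=paperclips_made)


for budget in (3, 30, 3000):
    f = best_plan(budget)
    print(f"budget={budget:>4}  paperclips={paperclips_made(f):7.1f}  "
          f"steel left for people={steel_left_for_people(f):5.1f}")
```

Because the objective never mentions `steel_left_for_people`, a larger search budget drives the allocation toward all-paperclips and leaves less steel for anything else; becoming a better optimizer does nothing to correct the goal itself.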

Advanced

- https://arbital.com/p/orthogonality/

- https://philosophicaldisquisitions.blogspot.com/2014/07/bostrom-on-superintelligence-1.html

- https://www.fhi.ox.ac.uk/wp-content/uploads/Orthogonality_Analysis_and_Metaethics-1.pdf

- https://www.alignmentforum.org/tag/orthogonality-thesis

- Intelligence and Stupidity: The Orthogonality Thesis https://www.youtube.com/watch?v=hEUO6pjwFOo